This article explains why we need a tactical view, how it is created, and how it has evolved, taking advantage of the BEPRO ecosystem along the way.

Football Broadcast

When we think of a football match on a screen, we have a television broadcast in mind.

The camera is operated by a human, and the production team decides which images we ultimately see.

However, if you want to watch the game from a tactical perspective, you face a problem: the camera determines what you see.

The video follows the action, which (who would have thought it) mostly takes place where the ball is. But to observe the game like a tactical analyst, you can’t just follow the ball, because what happens on the ball is only a small part of the whole picture.

Most of the time, more than twenty players on the field are not actively interacting with the ball. What are they doing? Where are they moving? Which positions or areas do they occupy? In fact, the actions of these players are often more important than the situation directly on the ball, as they will most likely determine the next move.

Panoramic Video

Unlike football on TV, our camera(s) capture every moment of your game. Our panoramic video covers the entire playing area for the entire length of the game. The 3D video player lets users become the camera operator and decide how they view the game.

Automatic Tactical View

Operating the camera yourself is fun, but it doesn’t allow you to fully concentrate on the overall gameplay. This is where our AI-Generated Tactical View comes into play.

The automatic camera movement allows our users to follow the exciting action of their team while assessing every tactical subtlety on the field.

BEPRO’s Tactical View is designed to keep as many outfield players as possible within the camera’s field of view at all times. Certain players, e.g. the goalkeeper or players trailing far behind the play, can be excluded to achieve the best possible perspective without losing sight of the tactical formation.
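To make the framing rule concrete, here is a minimal Python sketch, not BEPRO’s implementation. It assumes player positions are already known in pitch coordinates (metres), treats the view as an axis-aligned rectangle on a top-down pitch rather than a perspective camera, and excludes goalkeepers via a flag. The names `Player` and `view_rectangle`, the 3 m margin and the 16:9 output aspect ratio are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Player:
    x: float  # metres along the pitch length (assumed coordinate system)
    y: float  # metres along the pitch width
    is_goalkeeper: bool = False


def view_rectangle(players, margin=3.0, aspect=16 / 9):
    """Return (x_min, y_min, x_max, y_max) framing all outfield players.

    Hypothetical helper: goalkeepers are ignored, a margin is added around
    the outfield players, and the shorter side is grown to match the
    output aspect ratio (width / height).
    """
    outfield = [p for p in players if not p.is_goalkeeper]
    if not outfield:
        raise ValueError("no outfield players to frame")
    x_min = min(p.x for p in outfield) - margin
    x_max = max(p.x for p in outfield) + margin
    y_min = min(p.y for p in outfield) - margin
    y_max = max(p.y for p in outfield) + margin
    width, height = x_max - x_min, y_max - y_min
    if width / height < aspect:
        # Too narrow: widen symmetrically around the current centre.
        extra = (height * aspect - width) / 2
        x_min, x_max = x_min - extra, x_max + extra
    else:
        # Too flat: add height symmetrically around the current centre.
        extra = (width / aspect - height) / 2
        y_min, y_max = y_min - extra, y_max + extra
    return x_min, y_min, x_max, y_max


rect = view_rectangle([Player(40, 20), Player(60, 30), Player(5, 34, is_goalkeeper=True)])
```

Growing the rectangle symmetrically keeps the players centred; a real virtual camera would additionally have to clamp the view to the panorama’s bounds.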

From Pitch to View

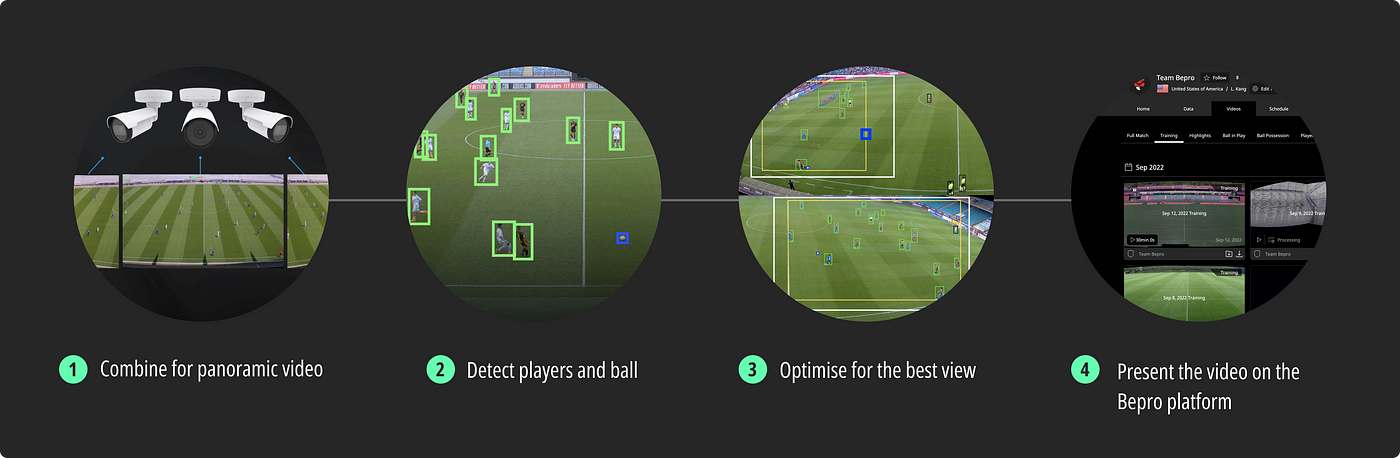

Here is a quick summary of the steps that produce the final Tactical View:

- Create a panoramic video from the video streams of each camera

- Perform object detection (player and ball) with an AI model

- Determine the best view position based on the accumulated information

- Present the result on our platform and provide it as a video for download

The second and third steps are the most relevant for creating the Tactical View, so let’s look at them in more detail.

Object Detection

For the camera to show the ideal section of the panoramic video, we need to assess what is happening on the field, which means knowing where the players and the ball are. To obtain this information, we use object detection technology.

The first sentence on Wikipedia sums it up nicely:

“Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos.”

But the question remains: how does this work? Well, the short (and frankly inaccurate) answer is: AI!

Today, AI is touted as the solution to almost every problem, often unfairly, but let’s face it: it’s fascinating to imagine an artificial intelligence being able to solve something. Object detection, however, truly is an area dominated by AI, or more precisely by deep learning.

We use a custom-designed model, specifically a convolutional neural network (CNN), that has been trained on millions of examples to identify objects (here: players, the ball, and the background). For the necessary training data, we can draw on our huge archive of recorded videos (currently approx. 60,000 hours). Moreover, this data is not only plentiful but also highly varied, thanks to the very different locations and users of our systems.
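Raw detector outputs usually need post-processing before they are useful. A widely used generic step is confidence thresholding followed by non-maximum suppression (NMS), which removes duplicate boxes for the same object. The sketch below shows that step in plain Python. The article does not say whether BEPRO’s model uses NMS, and the function names, the `(box, score, label)` tuple layout and the thresholds are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2) in pixels."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, score_threshold=0.5, iou_threshold=0.5):
    """Keep confident detections and suppress overlapping duplicates per class.

    Each detection is a tuple (box, score, label), e.g. label "player" or "ball".
    Greedy NMS: visit detections from highest to lowest score and keep one
    only if it does not overlap too much with an already kept box of the same class.
    """
    candidates = sorted(
        (d for d in detections if d[1] >= score_threshold),
        key=lambda d: d[1],
        reverse=True,
    )
    kept = []
    for det in candidates:
        box, _, label = det
        if all(k[2] != label or iou(box, k[0]) < iou_threshold for k in kept):
            kept.append(det)
    return kept
```

With two heavily overlapping "player" boxes, only the higher-scoring one survives, while a "ball" box is never suppressed by a player box because suppression is applied per class.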

Determine View Position

The first step is to transform the positions of the detected objects from the image coordinate system (position in the video image) to the world coordinate system (position on the playing field). The necessary calculations can be derived from the position of the camera relative to the playing field. Once the location of each object on the playing field has been determined, each detection goes through a series of filtering steps to minimise detection errors.
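For a camera looking at a flat pitch, the image-to-world mapping is commonly modelled as a planar homography: a 3×3 matrix, obtained from camera calibration, that maps pixel coordinates to pitch coordinates. A player’s position is typically taken at the bottom centre of their bounding box (the feet). The sketch below assumes such a matrix is given and pairs it with one simple filter that rejects positions outside an assumed 105 m × 68 m pitch. BEPRO’s actual geometry for the stitched panorama and its filtering steps are not described in this article, so treat `image_to_pitch`, `on_pitch` and all parameters as hypothetical.

```python
def image_to_pitch(h, u, v):
    """Map image pixel (u, v) to pitch coordinates (x, y) with a 3x3 homography h.

    h is a nested list [[h00, h01, h02], [h10, h11, h12], [h20, h21, h22]],
    assumed to come from a prior camera calibration.
    """
    x = h[0][0] * u + h[0][1] * v + h[0][2]
    y = h[1][0] * u + h[1][1] * v + h[1][2]
    w = h[2][0] * u + h[2][1] * v + h[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to get Cartesian pitch coordinates.
    return x / w, y / w


def on_pitch(x, y, length=105.0, width=68.0, tolerance=2.0):
    """Reject positions that fall clearly outside the (assumed) playing area."""
    return -tolerance <= x <= length + tolerance and -tolerance <= y <= width + tolerance


# Toy calibration: 1 pixel = 5 cm, image origin at the pitch corner.
H = [[0.05, 0.0, 0.0], [0.0, 0.05, 0.0], [0.0, 0.0, 1.0]]
feet = image_to_pitch(H, 1000, 600)  # bottom centre of a player's box
keep = on_pitch(*feet)
```

A real calibration matrix would include perspective terms in its last row; the toy matrix only scales so the result is easy to check by hand.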

The actual view of the camera is defined by a set of rules and operations based on the player and ball position information.

We call these definitions the Tactical View Logic.

The logic then decides which players are relevant and which can be excluded in favour of a better camera perspective. For this purpose, it uses various pieces of information, such as the distances between the identified players, their distance to the ball, and the ball’s general position.
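One way such an exclusion rule could look is sketched below. It is hypothetical, not BEPRO’s Tactical View Logic: it drops goalkeepers and any player who is both far from the ball and isolated from everyone else, using the kind of distance information mentioned above. The function name `select_relevant` and the 40 m and 15 m thresholds are assumptions for illustration.

```python
import math


def select_relevant(players, ball, max_ball_dist=40.0, max_neighbour_dist=15.0):
    """Return the positions the camera should try to keep in frame.

    players: list of (x, y, is_goalkeeper) in pitch metres; ball: (x, y).
    A player is dropped if they are a goalkeeper, or if they are both far
    from the ball and far from every other player (an isolated straggler).
    """
    relevant = []
    for i, (x, y, is_gk) in enumerate(players):
        if is_gk:
            continue
        ball_dist = math.dist((x, y), ball)
        # Distance to the closest other player (goalkeepers included).
        neighbour_dist = min(
            (math.dist((x, y), (ox, oy)) for j, (ox, oy, _) in enumerate(players) if j != i),
            default=math.inf,
        )
        if ball_dist > max_ball_dist and neighbour_dist > max_neighbour_dist:
            continue  # isolated player far behind the play
        relevant.append((x, y))
    return relevant
```

Requiring both conditions keeps, for example, a defender who is far from the ball but still part of a compact back line.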

In addition, various indicators are calculated to evaluate the current state of the game and adjust the view accordingly. The final step of the logic is to use the gathered information to determine the best possible image section and translate it into a seamless camera motion.
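Turning a per-frame target into seamless motion usually involves smoothing. The sketch below uses exponential smoothing with a cap on the per-frame step, a common technique for virtual cameras; it is not necessarily what the Tactical View Logic does. It only handles the horizontal pan, and `smooth_camera`, `alpha` and `max_step` are illustrative assumptions.

```python
def smooth_camera(targets, alpha=0.2, max_step=1.5):
    """Turn per-frame target centres into a smooth camera path.

    targets: list of desired horizontal view-centre positions (metres), one per frame.
    alpha: exponential smoothing factor in (0, 1]; lower values react more slowly.
    max_step: largest allowed camera movement per frame, to avoid jerky pans.
    """
    if not targets:
        return []
    path = [targets[0]]
    for target in targets[1:]:
        current = path[-1]
        # Move a fraction of the way towards the target...
        step = alpha * (target - current)
        # ...but never faster than max_step per frame.
        step = max(-max_step, min(max_step, step))
        path.append(current + step)
    return path


# A sudden switch of play from x=0 to x=50 becomes a steady pan.
path = smooth_camera([0.0] + [50.0] * 10)
```

Because each step is a fraction of the remaining distance, the camera slows down as it approaches the target instead of overshooting it.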

Introducing Version 2.0

The first version of Tactical View has been around for quite a while and has received constant updates. Over time, many minor improvements and bug fixes have been implemented, but to achieve a big leap in quality, sometimes it’s best to start with a blank sheet. And that’s exactly what we did.

We redesigned both the structure and the logic of Tactical View from the ground up.

In such a redesign, the initial goal is to match the quality of the original version. At the same time, we also aim to increase the efficiency, comprehensibility, and modularity of the code by scrutinising every single line. Once a certain level of imitation has been reached, the next phase begins: the student becomes the master.

Questioning the existing logic frequently produces completely new approaches, which can now be introduced and tested quickly thanks to the improved code structure. Here are a few highlights that were improved or newly developed compared to the original version:

- Faster camera response time

- Improved alignment of the camera to reduce blank backgrounds

- New method to exclude players to allow the best possible perspective

- Enhanced effectiveness of filtering mechanisms to avoid false detections

- New feature to assess the gameplay based on the players’ movements

- New feature to detect and capture corner kicks

- Novel method to identify critical situations with goal potential

Evaluation: Taking Advantage of BEPRO’s Ecosystem

The final topic of this article is one of the most important aspects of developing the Tactical View: assessing its quality. To approach this, two things must first be established:

- There is no universally valid definition of the ideal Tactical View

- The quality of the Tactical View is largely subjective

Faced with these facts, you’re probably wondering: how can one evaluate and improve something that is neither strictly defined nor objective? Our answer is: data!

Remember the millions of examples we extract from our videos to train our AI to identify objects? In addition, we perform detailed analyses of the games for our all-in-one ecosystem.

We use our analysed event data to convert the subjective quality of the Tactical View into numbers that can be compared.

The most important information in this data is the position of each event. Because every action is referenced to an exact location on the playing field, we can determine whether the event is visible in the Tactical View. This is the basic requirement and the starting point for any improvement. However, while not missing any event, the virtual camera should not show the entire playing field, only as much as necessary. For this purpose, the zoom level and the position of the Tactical View can also be evaluated. In addition, the number of directional changes of the camera should be kept as low as possible to provide a pleasant viewing experience.
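The three criteria above (event visibility, zoom level, and camera direction changes) can be turned into comparable numbers roughly as follows. This is a minimal sketch under assumed data layouts: views as per-frame rectangles on the pitch, events as `(frame, x, y)` tuples, and the camera’s horizontal centre per frame. The function name `evaluate_view` and the use of mean view area as a zoom proxy are assumptions, not BEPRO’s metric definitions.

```python
def evaluate_view(views, events, centres):
    """Score one Tactical View run against annotated event data.

    views: dict frame -> (x_min, y_min, x_max, y_max), the visible pitch area.
    events: list of (frame, x, y) event positions in pitch coordinates.
    centres: list of the camera's horizontal centre position, one per frame.
    """
    # 1. Share of events that fall inside the view at the frame they happen.
    visible = 0
    for frame, x, y in events:
        x_min, y_min, x_max, y_max = views[frame]
        if x_min <= x <= x_max and y_min <= y <= y_max:
            visible += 1
    visibility = visible / len(events) if events else 1.0

    # 2. Mean visible pitch area as a zoom proxy (smaller means tighter framing).
    mean_area = sum((v[2] - v[0]) * (v[3] - v[1]) for v in views.values()) / len(views)

    # 3. Number of reversals in pan direction, ignoring frames without movement.
    direction_changes, last_sign = 0, 0
    for prev, cur in zip(centres, centres[1:]):
        delta = cur - prev
        sign = (delta > 0) - (delta < 0)
        if sign and last_sign and sign != last_sign:
            direction_changes += 1
        if sign:
            last_sign = sign

    return {"visibility": visibility, "mean_area": mean_area, "direction_changes": direction_changes}
```

Comparing these numbers between two versions on the same games gives an objective signal for what is otherwise a subjective judgement: higher visibility is better, and for equal visibility a smaller mean area and fewer direction changes are preferable.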

However, the actual experience of focus and camera movement is still subjective and perceived differently by each user. That’s why we are constantly striving to improve our Tactical View.

Example Scenes

The following videos show clips of improved situations detected by the automatic tactical view evaluation. The previous version is on the left and the new version is on the right.
